Encord Blog

From Models to Agents: How to Build Future-Ready AI Infrastructure

In the early days of computer vision, machine learning infrastructure was relatively straightforward. You collected a dataset, labeled it, trained a model, and deployed it. The process was linear and static because the models we were building didn’t need to adapt to changing environments.

However, as AI applications advance, the systems we're building are no longer just models that make predictions. They are agents that perceive, decide, act, and learn in the real world. As model performance continues to improve rapidly, the supporting infrastructure needs to be optimized for dynamic, real-world feedback loops.

For high-performance AI teams building for complex use cases, such as surgical robotics or autonomous driving, this future-ready infrastructure is crucial. Without it, these teams will not be able to deliver at speed and at scale, hurting their competitive edge in the market.

Let’s unpack what this shift really means, and why you need to rethink your infrastructure now, not after your next model hits a wall in production.

What Model-Centric Infrastructure Looks Like

In traditional ML workflows, the model was the center, and the surrounding infrastructure (data collection, annotation tools, evaluation benchmarks) was all designed to feed the training process.

That stack typically looked like this:

- Collect a dataset (manually or from a fixed pipeline)

- Label it once

- Train a model

- Evaluate on a benchmark

- Deploy

But three things have changed:

- The tasks are getting harder – Models are being asked to understand context, multi-modal signals, temporal dynamics, and edge cases (e.g., in robotics applications).

- The environments are dynamic – Models are no longer just processing static inputs. They operate in real-world loops: in hospitals, warehouses, factories, and embedded applications.

- The cost of failure has gone up – It's not just about lower accuracy anymore. A brittle perception module in a surgical robot, or a misstep in a drone’s navigation agent, can mean real-world consequences.

Why We Are Shifting from Models to Agents

An agent isn’t just a model. It’s a system that:

- Perceives its environment (via CV, audio, sensor inputs, etc.)

- Decides what to do (based on learned policies or planning algorithms)

- Acts in the world (physical or digital)

- Learns from its outcomes

The key here is that agents learn from outcomes.

Agents don’t live in the world of fixed datasets and static benchmarks. They live in dynamic systems, and every decision they make produces new data, new edge cases, and new sources of feedback. That means your infrastructure can’t just support training. It has to support continuous improvement through a feedback loop.
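The perceive, decide, act, learn cycle can be sketched in a few lines. This is a toy illustration only: the `Outcome` record, the `decide` policy, and the `act` environment are hypothetical names invented for this example, not part of any product API. The point it shows is that every step of the loop emits a record that can later feed training.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Outcome:
    """One step of experience: what was seen, what was done, how it went."""
    observation: float
    action: str
    success: bool


def run_agent_loop(
    observations: List[float],
    decide: Callable[[float], str],
    act: Callable[[float, str], bool],
) -> List[Outcome]:
    """Perceive -> decide -> act, recording every outcome as new data."""
    log: List[Outcome] = []
    for obs in observations:                       # perceive
        action = decide(obs)                       # decide
        success = act(obs, action)                 # act
        log.append(Outcome(obs, action, success))  # data to learn from
    return log


# Toy policy and toy environment (both hypothetical).
def decide(obs: float) -> str:
    return "stop" if obs > 0.5 else "go"


def act(obs: float, action: str) -> bool:
    # In this toy world the correct action is to stop only above 0.7.
    return action == ("stop" if obs > 0.7 else "go")


log = run_agent_loop([0.1, 0.6, 0.9], decide, act)
failures = [o for o in log if not o.success]
print(len(log), len(failures))  # 3 1
```

The single failure (the observation at 0.6) is exactly the kind of record a feedback-oriented pipeline would want to capture rather than discard.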

What Agents Demand from Infrastructure

Here’s what AI agents operating in dynamic, real-world environments demand from training infrastructure:

1. Feedback Loops

Rather than a stack with a one-way flow (data → model → prediction), agents generate continuous feedback. They need infrastructure that can ingest that feedback and use it to trigger re-training, relabeling, or re-evaluation.
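As a rough sketch of what "ingest feedback and trigger an action" could mean in practice, the example below counts incoming feedback events by kind and returns the pipeline actions whose threshold has been reached. The event kinds, thresholds, and action names are all assumptions made up for illustration; a real system would define its own.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class FeedbackEvent:
    sample_id: str
    kind: str  # "label_error", "model_error", or "drift" (illustrative kinds)


# Hypothetical thresholds: how many events of a kind justify an action.
THRESHOLDS = {"label_error": 2, "model_error": 3, "drift": 1}
ACTIONS = {"label_error": "relabel", "model_error": "retrain", "drift": "re-evaluate"}


def plan_actions(events: Iterable[FeedbackEvent]) -> List[str]:
    """Return the actions whose event count has reached its threshold."""
    counts = Counter(e.kind for e in events)
    return sorted(
        ACTIONS[kind]
        for kind, n in counts.items()
        if kind in THRESHOLDS and n >= THRESHOLDS[kind]
    )


events = [
    FeedbackEvent("frame-001", "label_error"),
    FeedbackEvent("frame-002", "label_error"),
    FeedbackEvent("frame-003", "model_error"),
    FeedbackEvent("frame-004", "drift"),
]
print(plan_actions(events))  # ['re-evaluate', 'relabel']
```

Here a single model error is not enough to justify retraining, while two label errors and one drift signal are enough to trigger relabeling and re-evaluation.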

2. Behavior-Driven Data Ops

The next critical datapoint isn’t randomly sampled; it’s chosen based on what the agent is doing wrong. The system needs to surface failure modes and edge cases and automatically route them into data pipelines.
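One simple way to make data selection behavior-driven is to score each sample by how badly the agent handled it and label the highest-scoring ones first. The sketch below assumes each prediction carries a confidence value and a failure flag; the `Prediction` fields and the scoring rule are illustrative choices, not a prescribed method.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class Prediction:
    sample_id: str
    confidence: float  # model confidence in [0, 1]
    failed: bool       # True if the agent's action led to a bad outcome


def priority(p: Prediction) -> float:
    """Higher score = more useful to label. Failures outrank mere uncertainty."""
    return (1.0 if p.failed else 0.0) + (1.0 - p.confidence)


def select_for_labeling(preds: List[Prediction], budget: int) -> List[str]:
    """Pick the `budget` highest-priority samples instead of sampling at random."""
    top = heapq.nlargest(budget, preds, key=priority)
    return [p.sample_id for p in top]


preds = [
    Prediction("a", confidence=0.95, failed=False),
    Prediction("b", confidence=0.40, failed=False),
    Prediction("c", confidence=0.90, failed=True),
    Prediction("d", confidence=0.55, failed=True),
]
print(select_for_labeling(preds, budget=2))  # ['d', 'c']
```

Note that sample "c" is selected even though the model was confident about it: a confident failure is often more informative than an uncertain success.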

3. Contextual Annotation Workflows

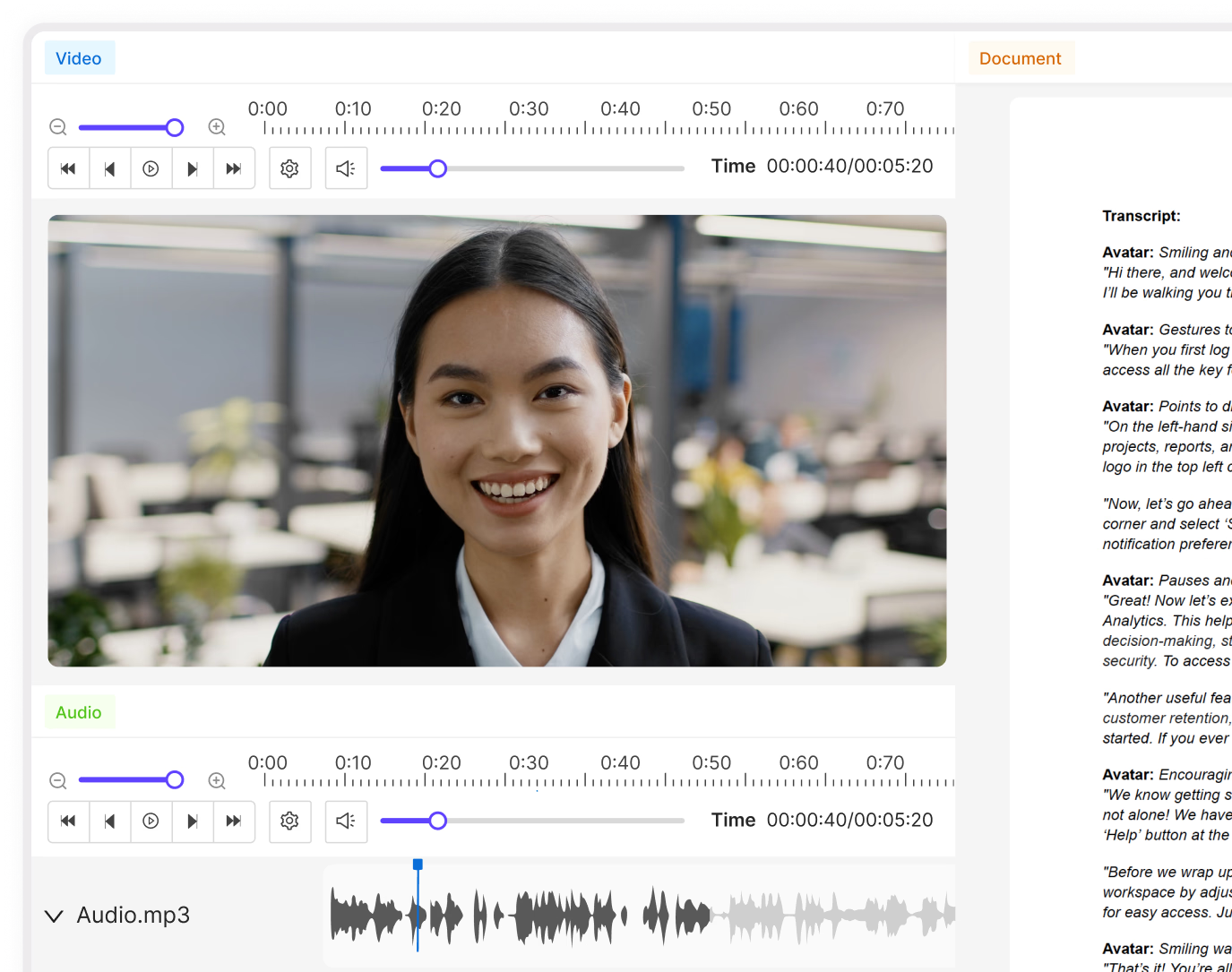

For agents operating in multimodal environments (e.g., surgical scenes, drone footage, or robotic arms), you need annotation systems that are aware of context. This is why a tool like Encord’s multimodal editor is helpful: it allows different views of a single object to be annotated simultaneously.

Encord HITL workflow

4. Real-Time Evaluation & Monitoring

The real challenge with agents and complex models arises once they are in production. This is where failures and edge cases often come to the surface. Therefore, your infrastructure must let you evaluate and monitor agents under real-world conditions.
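A minimal sketch of production monitoring is a rolling window over recent outcomes that raises an alert when the success rate drops below a floor. The class name, window size, and threshold below are assumptions chosen for the example.

```python
from collections import deque


class RollingMonitor:
    """Tracks success rate over the last `window` outcomes and flags drops."""

    def __init__(self, window: int, min_success_rate: float) -> None:
        self.outcomes = deque(maxlen=window)
        self.min_success_rate = min_success_rate

    def record(self, success: bool) -> bool:
        """Record one outcome; return True if an alert should fire."""
        self.outcomes.append(success)
        # Only alert once the window is full, to avoid noisy early alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.success_rate() < self.min_success_rate

    def success_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)


monitor = RollingMonitor(window=4, min_success_rate=0.75)
stream = [True, True, True, True, False, False]
alerts = [monitor.record(s) for s in stream]
print(alerts)  # [False, False, False, False, False, True]
```

The first failure leaves the windowed rate at exactly 0.75, which does not breach the floor; the second pushes it to 0.5 and fires the alert.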

5. Human-in-the-Loop, Where It Matters

Your human experts are expensive. Don’t waste them labeling random frames. Instead, design your workflows so that humans focus on critical decisions, edge-case adjudication, and behavior-guided corrections.
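The idea of spending expert time only where it matters can be sketched as a triage rule: auto-accept confident, low-stakes predictions and queue everything else for review. The `Item` fields and the 0.9 cutoff are hypothetical and would need tuning for a real workflow.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Item:
    item_id: str
    confidence: float      # model confidence in [0, 1]
    safety_critical: bool  # e.g. a frame from a high-risk phase of a procedure


def triage(items: List[Item], auto_accept_above: float = 0.9) -> Dict[str, List[str]]:
    """Send only uncertain or safety-critical items to human experts."""
    queues: Dict[str, List[str]] = {"human_review": [], "auto_accept": []}
    for item in items:
        needs_human = item.safety_critical or item.confidence < auto_accept_above
        queues["human_review" if needs_human else "auto_accept"].append(item.item_id)
    return queues


items = [
    Item("f1", 0.98, safety_critical=False),
    Item("f2", 0.97, safety_critical=True),
    Item("f3", 0.62, safety_critical=False),
]
print(triage(items))  # {'human_review': ['f2', 'f3'], 'auto_accept': ['f1']}
```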

| Requirement | Legacy ML Infra | Future-Ready Infra (via Encord) |
| --- | --- | --- |
| Feedback Integration | Manual, slow | Automated, real-time looped-in |
| Data Curation | Static, offline | Behavior- and performance-driven |
| Annotation Tooling | Flat, static, single-modal | Contextual, temporal, multi-modal |
| Evaluation | Benchmark-only | Continuous, in-situ |
| Human-in-the-Loop | Generic, high-lift | Targeted, outcome-aware |
| Retraining Triggers | Manual decision | Outcome-driven automation |

How to Use Encord to Build AI Infra for CV Agents

At Encord, we’re building the data layer for frontier AI teams. That means we’re not just another labeling tool, or a dataset management platform. We’re helping turn raw data, model outputs, agent behaviors, and human input into a cohesive system.

Let’s take some complex computer vision use cases to illustrate these points:

Closing the Feedback Loop

An AI-powered surgical assistant captures post-op feedback. That feedback is routed through Encord to identify mislabeled cases or new patterns, which are automatically prioritized for re-annotation and model updates.

Surgical video ontology in Encord

Behavior-Based Data Routing

An autonomous warehouse robot team uses Encord to tag failure logs. These logs automatically trigger active learning workflows, so that the most impactful data gets labeled and reintroduced into training first.

Contextual, Domain-Aware Labeling

In computer vision for aerial drone surveillance, users annotate multi-frame sequences with temporal dependencies. Encord enables annotation with full temporal context and behavior tagging across frames.

Agricultural drone CV application
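To make "temporal context and behavior tagging across frames" concrete, here is one possible in-memory representation of a multi-frame annotation: an object track with per-frame boxes and behavior labels that span frame ranges. This is an illustrative data structure only; the class and field names are assumptions and do not reflect Encord's actual label format or SDK.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x, y, width, height (normalized)


@dataclass
class BehaviorTag:
    label: str
    start_frame: int
    end_frame: int  # inclusive


@dataclass
class ObjectTrack:
    """One object followed across frames, with behaviors tagged over ranges."""
    track_id: str
    category: str
    boxes: Dict[int, Box] = field(default_factory=dict)  # frame index -> box
    behaviors: List[BehaviorTag] = field(default_factory=list)

    def behaviors_at(self, frame: int) -> List[str]:
        """Return the behavior labels active on a given frame."""
        return [
            b.label for b in self.behaviors
            if b.start_frame <= frame <= b.end_frame
        ]


track = ObjectTrack("t1", "vehicle")
track.boxes.update({0: (0.10, 0.20, 0.05, 0.05), 1: (0.12, 0.20, 0.05, 0.05)})
track.behaviors.append(BehaviorTag("moving", 0, 30))
track.behaviors.append(BehaviorTag("turning", 20, 30))
print(track.behaviors_at(10), track.behaviors_at(25))  # ['moving'] ['moving', 'turning']
```

Attaching behaviors to frame ranges rather than to individual frames keeps the temporal dependency explicit: an annotator tags "turning" once, and every frame in that range inherits it.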

Dynamic Evaluation Metrics

Instead of relying on outdated benchmarks, users evaluate models live based on how agents perform in the real environment.

Why This Matters for AI/ML Leaders

If you're a CTO, Head of AI, or technical founder, this shift should be on your radar for one key reason: If your infrastructure is built for yesterday’s AI, you’ll spend the next 18 months patching it.

We’re seeing a growing split:

- Companies that invest in orchestration and feedback are accelerating.

- Companies still on static pipelines are drowning in tech debt and firefighting.

You don’t want to retrofit orchestration after your systems are in production. You want to build it in from the start, especially as agents become the dominant paradigm across CV and multi-modal AI.

The AI landscape is moving faster than most infrastructure can handle. You need infrastructure that helps your models and agents learn, adapt, and improve in the loop.

That is where Encord comes in:

- Build agent-aware data pipelines

- Annotate and evaluate in dynamic, context-rich environments

- Automate feedback integration and retraining triggers

- Maintain human oversight where it matters most

- Adapt infrastructure alongside AI advancement

Key Takeaways

The AI systems of tomorrow won’t just predict; they’ll act, adapt, and improve. They’ll live in the real world, not in your test set. And they’ll need infrastructure that can evolve with them.

If you’re leading an AI team at the frontier, now’s the time to modernize your infrastructure. Invest in feedback, automation, and behavioral intelligence.
